4 Steps to Mitigate Algorithmic Bias

Amid the steady rise in the use of artificial intelligence (AI) in health care, a groundswell of concern has surfaced about the potential for algorithmic bias to affect safety, equity of care and treatment decisions.

In its first global report on AI, the World Health Organization recently cited concerns about algorithmic bias and the potential to misuse the technology and cause harm.

Opportunities for improvement are linked to challenges and risks, the report notes, including unethical collection and use of health data; biases encoded in algorithms; and risks of AI to patient safety, cybersecurity and the environment. Biased algorithms in health care also can influence clinical care, operational workflows and policy.

Earlier this year, the Federal Trade Commission advised companies not to implement AI tools that could unintentionally result in discrimination. And the Department of Health & Human Services’ Agency for Healthcare Research and Quality this year issued a request for information on algorithms that could introduce racial or ethnic bias into care delivery.

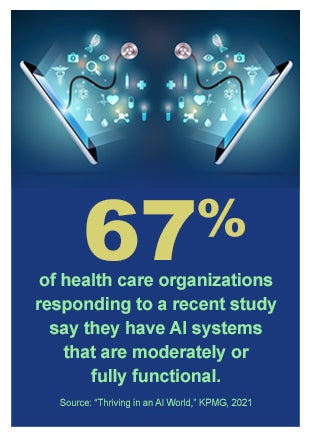

These concerns come at a critical juncture in AI’s deployment in the field. Ever-growing numbers of hospitals and health systems are implementing AI to carry out such crucial tasks as triaging patients, predicting the likelihood of developing diabetes and other diseases, and detecting patients who may need more help managing their medical conditions.

In fact, 67% of health care organizations responding to a KPMG survey released in April say they have AI systems that are moderately or fully functional.

As for what can be done to identify and address algorithmic bias, researchers from the University of Chicago Booth School’s Center for Applied Artificial Intelligence in June released a playbook to help hospitals and health systems.

4 Steps to Audit for Algorithmic Bias

STEP 1: Inventory algorithms.

List all the algorithms being used or developed in your organization. Designate a steward to maintain and update the inventory. The steward should have oversight of broad strategic decisions (i.e., be a member of the C-suite) and collaborate closely with a diverse committee of internal and external stakeholders.
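As a rough illustration of what such an inventory might look like in practice, the Python sketch below keeps one record per algorithm and flags entries that have never been screened or were screened long ago. The field names, the sample entries and the one-year review interval are assumptions made for this example; they do not come from the playbook.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AlgorithmRecord:
    """One inventory entry. All field names are hypothetical."""
    name: str
    purpose: str
    steward: str
    status: str  # e.g., "in use" or "in development"
    last_screened: Optional[date] = None  # None means never screened


def needs_screening(inventory: List[AlgorithmRecord],
                    today: date,
                    max_age_days: int = 365) -> List[str]:
    """Return names of algorithms never screened or screened too long ago.

    The one-year default is an assumed review interval, not a recommendation
    taken from the source.
    """
    return [
        record.name
        for record in inventory
        if record.last_screened is None
        or (today - record.last_screened).days > max_age_days
    ]


# Hypothetical inventory entries for illustration only.
inventory = [
    AlgorithmRecord("triage-score", "Emergency department triage",
                    "Chief medical officer", "in use", date(2021, 1, 10)),
    AlgorithmRecord("diabetes-risk", "Predict diabetes onset",
                    "Chief medical officer", "in development"),
    AlgorithmRecord("care-mgmt", "Flag patients for extra support",
                    "Chief medical officer", "in use", date(2022, 3, 1)),
]

print(needs_screening(inventory, today=date(2022, 6, 1)))
```

A spreadsheet would serve the same purpose; the point is that each algorithm has a named steward and a recorded screening date.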

STEP 2: Screen each algorithm for bias.

Think of this step as a debugging process for algorithms. Assess inputs and outputs of each algorithm and whether they are susceptible to or demonstrate bias. Pay close attention to whether proxies that an algorithm uses could introduce bias. Articulate the algorithm’s ideal target vs. its actual target.
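One way to make the comparison of ideal and actual targets concrete is to group patients by similar algorithm scores and check whether a measure of the ideal target differs across demographic groups within each score band. The Python sketch below is a minimal version of that check under stated assumptions: the record layout, the function names, the number of bands and the sample data are all hypothetical, and a real audit would need far more data and statistical care.

```python
from collections import defaultdict
from statistics import mean


def screen_for_proxy_bias(records, n_bins=4):
    """Compare the ideal target across groups at similar score levels.

    records: iterable of (group, score, ideal_target) tuples, where score is
    the algorithm's output and ideal_target measures what the algorithm is
    meant to capture (for example, a measure of health need).
    Returns {bin_index: {group: mean ideal_target}}, with bin 0 holding the
    lowest scores.
    """
    ordered = sorted(records, key=lambda r: r[1])
    n = len(ordered)
    by_bin = defaultdict(lambda: defaultdict(list))
    for rank, (group, _score, ideal) in enumerate(ordered):
        # Equal-sized bins by score rank.
        bin_index = min(rank * n_bins // n, n_bins - 1)
        by_bin[bin_index][group].append(ideal)
    return {
        b: {g: mean(values) for g, values in groups.items()}
        for b, groups in sorted(by_bin.items())
    }


def max_gap_per_bin(bin_means):
    """Largest between-group difference in the ideal target, per score bin."""
    return {b: max(m.values()) - min(m.values()) for b, m in bin_means.items()}


# Made-up data: (group, algorithm score, ideal-target measure).
sample = [
    ("A", 0.1, 1), ("B", 0.1, 2), ("A", 0.2, 1), ("B", 0.2, 3),
    ("A", 0.8, 3), ("B", 0.8, 5), ("A", 0.9, 4), ("B", 0.9, 6),
]
means = screen_for_proxy_bias(sample, n_bins=2)
print(means)
print(max_gap_per_bin(means))
```

If one group consistently shows a higher ideal-target value than another at the same score level, as group B does in this made-up data, the score may be tracking a proxy that understates that group's need, and the algorithm warrants closer review.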

STEP 3: Retrain biased algorithms.

Improve or suspend the use of biased algorithms. If bias is detected in an algorithm, find a way to improve it, such as retraining it with more data or predicting a slightly different outcome.

STEP 4: Prevent future bias.

Establish structures and protocols for ongoing bias mitigation and set up a permanent team to uphold those protocols. Develop a pathway for reporting algorithmic bias concerns and requirements for documenting algorithms.